Artificial intelligence continues to achieve remarkable feats, yet a peculiar trend has emerged—AI models can't seem to cross a specific performance boundary.

This phenomenon raises fundamental questions about the nature of intelligence, computation, and the limits of machine learning.

Let’s delve into the scaling laws governing AI development and explore what they might mean for the future of the field.

The Line That Can't Be Crossed

Neural Scaling Laws: A Universal Trend

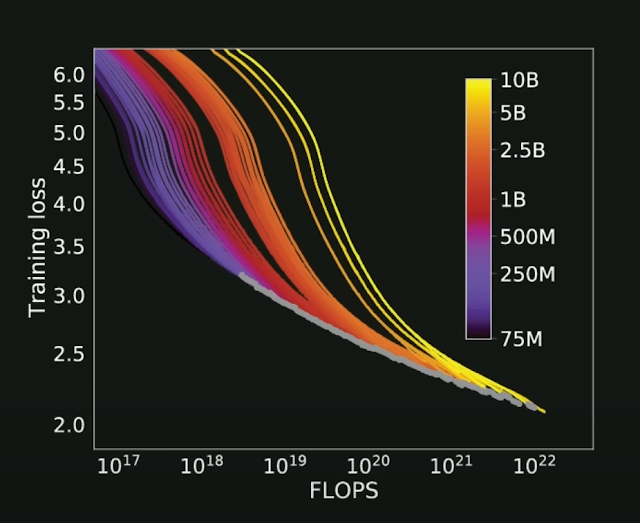

In 2020, OpenAI revealed three fundamental scaling laws that link error rates to:

1. Compute

2. Model size

3. Dataset size

These laws are strikingly consistent across tasks, including language, image, and video modeling.

They are largely agnostic to model architecture, as long as reasonable choices are made.
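As a rough illustration of what such a law looks like in practice, the sketch below assumes the simplest form discussed in the scaling-law literature: a pure power law L(x) = (x_c / x)^alpha, where x stands for compute, model size, or dataset size. The function names and the constants x_c = 1e13 and alpha = 0.08 are hypothetical, chosen only to generate synthetic data; they are not OpenAI's fitted values. Because a power law is a straight line on log-log axes, an ordinary least-squares line fit recovers both constants.

```python
import math

def power_law_loss(x, x_c, alpha):
    """Loss predicted by a pure power law L(x) = (x_c / x) ** alpha."""
    return (x_c / x) ** alpha

def fit_power_law(xs, losses):
    """Fit log L = alpha * log(x_c) - alpha * log(x) by least squares.

    Returns the recovered (x_c, alpha).
    """
    log_x = [math.log(x) for x in xs]
    log_l = [math.log(l) for l in losses]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_l = sum(log_l) / n
    slope = sum((a - mean_x) * (b - mean_l) for a, b in zip(log_x, log_l)) / sum(
        (a - mean_x) ** 2 for a in log_x
    )
    intercept = mean_l - slope * mean_x
    alpha = -slope                       # the slope of the line is -alpha
    x_c = math.exp(intercept / alpha)    # the intercept is alpha * log(x_c)
    return x_c, alpha

# Synthetic "model sizes" and losses generated from hypothetical constants.
TRUE_X_C, TRUE_ALPHA = 1e13, 0.08
sizes = [1e6, 1e7, 1e8, 1e9, 1e10]
losses = [power_law_loss(n, TRUE_X_C, TRUE_ALPHA) for n in sizes]

fitted_x_c, fitted_alpha = fit_power_law(sizes, losses)
print(f"fitted alpha = {fitted_alpha:.4f}, fitted x_c = {fitted_x_c:.3e}")
```

Real training runs are noisy, so a fit on measured losses would not recover the constants exactly; the point is only that a small exponent means each tenfold increase in scale buys a modest, predictable drop in loss.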

The implications are profound: these laws may represent an underlying principle akin to a physical law, dictating the behavior of AI systems.

Is This a Fundamental Law?

The core mystery is whether these trends reflect a universal law or simply the limitations of neural networks as we currently conceive them.

If scaling laws are fundamental, they might offer blueprints for constructing AI systems.

Alternatively, they could be the result of current flawed methodologies, leaving open the possibility of breakthroughs with entirely new paradigms.

Scaling to GPT-3 and Beyond

OpenAI’s work on GPT-3 exemplifies the power of these laws. Released in mid-2020, GPT-3 featured 175 billion parameters and required roughly 3,640 petaflop/s-days of compute to train. Its performance adhered closely to predictions made from the scaling laws published earlier that year, demonstrating their predictive power.
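The compute figure can be sanity-checked with back-of-the-envelope arithmetic. The sketch below rests on two assumptions that are not stated above: the common rule of thumb that training costs about 6 floating-point operations per parameter per training token, and a training set of roughly 300 billion tokens, the figure reported in the GPT-3 paper. One petaflop/s-day is 10^15 operations per second sustained for 24 hours. The function name is illustrative.

```python
FLOPS_PER_PARAM_PER_TOKEN = 6           # rule-of-thumb assumption, not an exact count
PETAFLOP_S_DAY = 1e15 * 24 * 60 * 60    # operations in one petaflop/s-day

def training_compute_pf_days(n_params, n_tokens):
    """Approximate training compute in petaflop/s-days using C ~ 6 * N * D."""
    total_flops = FLOPS_PER_PARAM_PER_TOKEN * n_params * n_tokens
    return total_flops / PETAFLOP_S_DAY

gpt3_params = 175e9   # from the text above
gpt3_tokens = 300e9   # assumption: token count reported in the GPT-3 paper

estimate = training_compute_pf_days(gpt3_params, gpt3_tokens)
print(f"estimated compute: {estimate:,.0f} petaflop/s-days")
```

Under these assumptions the estimate lands within about one percent of the 3,640 figure, which suggests the reported number is consistent with the model's size and data budget.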

GPT-3’s success also underscored the trend that larger models perform better, so long as sufficient compute and data are available. This realization informed OpenAI’s strategy to invest heavily in scale, culminating in GPT-4. Despite a training cost reportedly over $100 million, GPT-4’s performance continued to follow the same scaling laws.

Why Can’t We Reach Zero Error?

Even with near-limitless resources, error rates seem unable to reach zero. This is partly due to the entropy of natural language—the inherent uncertainty and variability in human text. For example, the phrase “A neural network is a…” has many plausible continuations, none of which are definitively correct. Models like ChatGPT are trained to predict probabilities across these options, and their error rates reflect this irreducible uncertainty.
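A toy calculation makes the floor concrete. The sketch below assumes a made-up distribution over four continuations of “A neural network is a…”; the tokens and probabilities are hypothetical, not measured from any corpus. Even a model that matches this distribution exactly has a cross-entropy equal to the distribution's entropy, which is greater than zero, and any other model does worse.

```python
import math

def entropy(p):
    """Shannon entropy (in nats) of a distribution given as {token: probability}."""
    return -sum(prob * math.log(prob) for prob in p.values() if prob > 0)

def cross_entropy(p, q):
    """Expected loss (in nats) of model q when tokens are actually drawn from p."""
    return -sum(prob * math.log(q[tok]) for tok, prob in p.items() if prob > 0)

# Hypothetical "true" next-token distribution for the example prompt.
true_dist = {"model": 0.4, "function": 0.3, "system": 0.2, "graph": 0.1}

perfect_model = dict(true_dist)  # predicts the true probabilities exactly
overconfident_model = {"model": 0.85, "function": 0.05, "system": 0.05, "graph": 0.05}

floor = entropy(true_dist)
print(f"entropy (irreducible loss): {floor:.3f} nats")
print(f"perfect model loss:         {cross_entropy(true_dist, perfect_model):.3f} nats")
print(f"overconfident model loss:   {cross_entropy(true_dist, overconfident_model):.3f} nats")
```

The gap between a model's loss and this floor is the part that more compute, parameters, or data can still reduce; the floor itself is a property of the text, not of the model.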

Emergent Abilities and Scale

Certain capabilities seem to emerge suddenly at specific scale thresholds. For instance, abilities like arithmetic or complex reasoning don't gradually improve with scale but appear to "switch on" at certain model sizes. This observation challenges the smoothness implied by scaling laws and suggests a more complex relationship between model scale and capabilities.

The Role of Cross-Entropy Loss

AI training relies heavily on cross-entropy loss, a metric that quantifies how well a model predicts the correct outputs. The loss is the negative logarithm of the probability the model assigns to the correct output, so it grows without bound as that probability approaches zero, and confident wrong predictions are penalized heavily. Cross-entropy loss underpins the scaling laws, connecting performance improvements to increases in model size, compute, and data.
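For a single prediction, cross-entropy reduces to the negative logarithm of the probability the model gave to the token that actually appeared. The minimal sketch below uses hypothetical function names and made-up probabilities to show how the penalty behaves as that probability shrinks, and how per-token losses are averaged over a sequence.

```python
import math

def token_loss(p_correct):
    """Cross-entropy for one prediction: -log of the probability given to the correct token."""
    return -math.log(p_correct)

def mean_loss(probs_of_correct_tokens):
    """Average per-token loss over a sequence, the quantity typically reported in training."""
    return sum(token_loss(p) for p in probs_of_correct_tokens) / len(probs_of_correct_tokens)

# The loss is zero at p = 1 and rises steeply as p approaches 0.
for p in (0.9, 0.5, 0.1, 0.01):
    print(f"p(correct) = {p:<5} loss = {token_loss(p):.3f} nats")

# Made-up probabilities a model might assign to each correct token in a short sequence.
sequence_probs = [0.8, 0.6, 0.05, 0.9]
print(f"mean loss over the sequence: {mean_loss(sequence_probs):.3f} nats")
```

A single badly missed token (0.05 in the example sequence) dominates the average, which is why the loss rewards well-calibrated probabilities rather than confident guesses.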

The Data Quality Frontier

As models grow larger, the quality and diversity of training data become increasingly critical. There's emerging evidence that we're approaching the limits of high-quality, publicly available data. This "data wall" might represent a more immediate boundary than computational limits, suggesting that future advances may require novel data curation and generation strategies.

Challenges and Future Directions

While scaling laws offer a powerful framework, they also highlight the growing challenges of building larger models. The cost, energy consumption, and infrastructure demands escalate rapidly. Moreover, questions remain about the true nature of these trends:

- Are we nearing the limits of neural networks?

- Could new architectures break free from the compute-optimal frontier?

What Comes Next?

To answer these questions, the field needs more than empirical observations—it requires theoretical breakthroughs. Emerging research suggests that scaling laws may stem from how deep learning models resolve high-dimensional data manifolds, but much work remains to validate and expand these theories.

As we push the boundaries of AI, scaling laws serve as both a guide and a challenge. They offer a tantalizing glimpse into the structure of intelligence but also remind us of the hurdles we must overcome to unlock the full potential of machine learning.

Conclusion

The discovery of neural scaling laws marks a turning point in our understanding of AI. Whether they represent a universal principle or a transient limitation, they have reshaped how we think about building intelligent systems. As researchers and engineers, we stand at the frontier of this knowledge, striving to uncover the deeper truths that govern the remarkable machines we create.

Neural scaling law: https://en.wikipedia.org/wiki/Neural_scaling_law

AI can't cross this line and we don't know why. - Welch Labs: https://www.youtube.com/watch?v=5eqRuVp65eY
